Real-time Robot Control with Leap Motion

Using the Leap Motion Sensor to Control a Robotic Arm in Real Time

One of the first robotics projects I undertook during my master's was to find a way to interact with a robotic arm (also called a manipulator) without using a hand-held controller. Typically, a robotic arm is controlled with a wired or wireless teach pendant. The pendant lets an engineer move the arm into any configuration it can reach and save the positions and joint values. These values can then be replayed any number of times, so the robot performs a given task repeatably. Learning to operate the teach pendant alone takes time.

Some modern robotic arms can instead be guided by hand: you physically push the arm into a specific configuration. Literally hand-holding them as they learn.

What if the robot can watch our hands instead and learn? 🤔

This was the basic idea behind the project. Before attempting to implement any new way of controlling the arm, I had to learn some of the underlying math of robotic arms.

I'll keep this article as simple as I can, without going into every tiny detail.

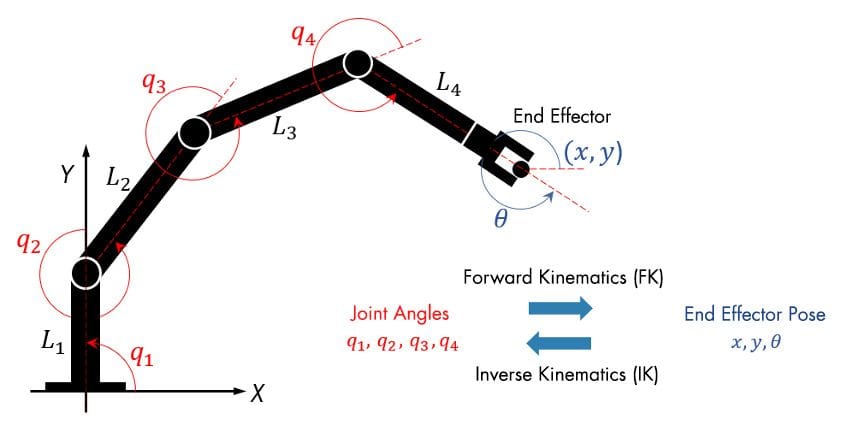

First, I had to understand the term DoF (degrees of freedom). Every robotic arm has a specific number of DoF. If a robot can move along only two axes, we say it has 2 DoF. Similarly, a 3-DoF robot can move along three axes: X, Y and Z. If the robot also has a spherical joint (a wrist), it can additionally rotate its tip about three axes, giving it 6 DoF in total.

Second, I had to understand inverse kinematics (IK): the math that solves for the joint angles the robot needs in order to reach a specific position.
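For an arm with a rotating base, a shoulder and an elbow, a common textbook way to carry out this calculation is to aim the base at the target and then solve the shoulder and elbow as a planar two-link problem using the law of cosines. The equations below are that generic solution, not necessarily the exact ones used in this project. They assume link lengths $l_1$ (shoulder to elbow) and $l_2$ (elbow to wrist), a target $(x, y, z)$ measured from the shoulder pivot with $z$ pointing up, a base axis passing through that pivot, and a wrist held fixed.

$$
\begin{aligned}
\theta_1 &= \operatorname{atan2}(y,\ x) \\
r &= \sqrt{x^2 + y^2}, \qquad d^2 = r^2 + z^2 \\
\cos\theta_3 &= \frac{d^2 - l_1^2 - l_2^2}{2\, l_1 l_2}, \qquad \theta_3 = \pm\arccos\left(\cos\theta_3\right) \\
\theta_2 &= \operatorname{atan2}(z,\ r) - \operatorname{atan2}\!\left(l_2 \sin\theta_3,\ l_1 + l_2 \cos\theta_3\right)
\end{aligned}
$$

Here $\theta_1$ is the base rotation, $\theta_2$ is the shoulder angle above the horizontal, and $\theta_3$ is the elbow angle relative to the upper arm; the two signs of $\theta_3$ give the elbow-down and elbow-up solutions. A target is reachable only if $|l_1 - l_2| \le d \le l_1 + l_2$, which is the same as requiring $|\cos\theta_3| \le 1$.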

In the figure above, notice that there are 4 joints (q1, q2, q3, q4) and an end effector that can rotate about one axis, so this is a 5-DoF robotic arm.

If we know the X, Y, Z coordinates of the end effector, we can use IK to calculate the joint angles the robot needs to reach that position.
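As a rough illustration of that calculation, here is a minimal Java sketch of inverse kinematics for an arm with a rotating base, a shoulder and an elbow, ignoring the wrist and gripper. It uses the standard law-of-cosines solution. The class name `SimpleIK`, the link lengths, and the convention that the target is given in millimeters from the shoulder pivot with z pointing up are assumptions for illustration, not values from the OWI arm or from the project's code.

```java
/** Hypothetical IK helper: base yaw + planar shoulder/elbow (wrist ignored). */
public class SimpleIK {
    // Assumed link lengths in mm (illustrative, not measured OWI values)
    static final double L1 = 90.0;   // shoulder to elbow
    static final double L2 = 110.0;  // elbow to wrist

    /**
     * Returns {base, shoulder, elbow} angles in degrees for a target (x, y, z)
     * measured from the shoulder pivot (z up), or null if it is out of reach.
     */
    static double[] solve(double x, double y, double z) {
        double base = Math.atan2(y, x);       // rotate the base toward the target
        double r = Math.hypot(x, y);          // horizontal distance to the target
        double d2 = r * r + z * z;            // squared shoulder-to-target distance
        double cosElbow = (d2 - L1 * L1 - L2 * L2) / (2 * L1 * L2);
        if (cosElbow < -1.0 || cosElbow > 1.0) {
            return null;                      // target outside the reachable shell
        }
        // Elbow-down solution; negate the acos result for elbow-up
        double elbow = Math.acos(cosElbow);
        double shoulder = Math.atan2(z, r)
                - Math.atan2(L2 * Math.sin(elbow), L1 + L2 * Math.cos(elbow));
        return new double[] {
            Math.toDegrees(base), Math.toDegrees(shoulder), Math.toDegrees(elbow)
        };
    }

    public static void main(String[] args) {
        double[] q = solve(100, 100, 100);
        if (q == null) {
            System.out.println("unreachable");
        } else {
            System.out.printf("base=%.1f shoulder=%.1f elbow=%.1f%n", q[0], q[1], q[2]);
        }
    }
}
```

Returning `null` for unreachable targets matters in a hand-tracking setup, because a hand can easily move to a point the arm cannot reach; the caller can simply skip that frame.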

Next, I needed a way to track a human hand and get its X, Y, Z coordinates, so I could feed them into the IK equations and solve for the robot's joint angles.

This is where the new Leap Motion sensor comes in.

I spent quite some time playing with the apps that come with this sensor, and it was a fun experience. The sensor is inexpensive and available to consumers. More importantly, developers can access its API and integrate it into their own projects, so I chose it for this project. The Leap Motion sensor uses stereo infrared cameras and infrared LEDs to detect the structure of the hand and track the motion of each finger with a precision of 1 mm.

The robot I chose for this project is the OWI Robotic Arm Edge. It is also an inexpensive robotic arm toy. It comes unassembled, and building it takes quite some time. I would recommend it to any enthusiast who enjoys the assembly process.
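Because the OWI Robotic Arm Edge is driven by DC gear motors with no position encoders, software cannot read its joint angles back. Any controller that moves it to a target has to estimate the angles open-loop, for example by timing how long each motor runs. The sketch below illustrates that idea for a single joint. It is not taken from this project: the class `TimedJoint`, the constant motor speed, and the joint limits are hypothetical values chosen for illustration.

```java
/** Hypothetical open-loop joint model: the angle is estimated from motor run time. */
public class TimedJoint {
    private final double degPerSecond;    // assumed constant motor speed
    private final double minDeg, maxDeg;  // assumed mechanical limits
    private double angleDeg;              // current estimate (no encoder to read)

    public TimedJoint(double degPerSecond, double minDeg, double maxDeg) {
        this.degPerSecond = degPerSecond;
        this.minDeg = minDeg;
        this.maxDeg = maxDeg;
        this.angleDeg = 0.0;              // assume the joint starts at its zero pose
    }

    /**
     * Plans a move to targetDeg and returns the signed run time in milliseconds
     * (positive = forward, negative = reverse). A caller would switch the motor
     * on in that direction for |ms| milliseconds.
     */
    public long planMove(double targetDeg) {
        double clamped = Math.max(minDeg, Math.min(maxDeg, targetDeg));
        double delta = clamped - angleDeg;
        long ms = Math.round(Math.abs(delta) / degPerSecond * 1000.0);
        angleDeg = clamped;               // update the estimate; errors accumulate over time
        return delta >= 0 ? ms : -ms;
    }

    public double angle() {
        return angleDeg;
    }

    public static void main(String[] args) {
        TimedJoint elbow = new TimedJoint(20.0, -90.0, 90.0);
        System.out.println(elbow.planMove(30.0));   // run forward
        System.out.println(elbow.planMove(-120.0)); // clamped to -90, run in reverse
    }
}
```

Because each estimate builds on the previous one, timing errors accumulate, which is one reason an open-loop arm benefits from returning to a known home pose from time to time.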

The OWI arm also has a USB interface that lets us control it from a PC. With simple Java code, we can control the OWI robot programmatically by passing it end-effector coordinates.

```java
RobotArm owi = new RobotArm();

// Move to the home position
owi.moveToZero();

// Move the end effector to (100, 100, 100)
owi.moveTo(100, 100, 100);
```

Next, we grab the hand coordinates from the Leap Motion Java API. The Java API I used may since have been deprecated and replaced by a newer SDK. Still, here is how we can access the coordinates of the tip of the index finger (the center of its distal bone) while the hand hovers over the sensor.

```java
// Runs once per tracking frame, with `frame` (the current Leap Motion Frame)
// and `owi` (the RobotArm from above) in scope
for (Finger finger : frame.fingers()) {
    if (finger.type() == Finger.Type.TYPE_INDEX) {
        // The distal bone is the fingertip segment of the finger
        Bone distal = finger.bone(Bone.Type.TYPE_DISTAL);
        int x1 = (int) distal.center().getX();
        int y1 = (int) distal.center().getY();
        int z1 = (int) distal.center().getZ();

        // Leap Motion reports y up and z toward the user (in mm),
        // so the axes are remapped as (x, -z, y) for the arm
        owi.moveTo(x1, -z1, y1);
    }
}
```

This code must live in a listener class whose `onFrame` method the Leap Motion SDK calls for every tracking frame the sensor produces. A complete class looks like the following.

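```java
import com.leapmotion.leap.*;
import java.io.IOException;

// RobotArm is the project's own wrapper around the OWI USB interface (not shown)
class SampleListener extends Listener {

    RobotArm robotArm;

    // Becomes true once a hand has been seen; removing the hand afterwards shuts down
    boolean handSeen = false;

    @Override
    public void onConnect(Controller controller) {
        robotArm = new RobotArm();
        System.out.println("Connected");
        // Swipe gestures are enabled but not used by this listener
        controller.enableGesture(Gesture.Type.TYPE_SWIPE);
    }

    @Override
    public void onFrame(Controller controller) {
        // Process at most about two frames per second
        try {
            Thread.sleep(500);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return;
        }

        Frame frame = controller.frame();  // most recent tracking frame

        if (frame.hands().count() == 0) {
            if (!handSeen) {
                System.out.println("Put your hand to start");
            } else {
                // The hand was removed: park the arm, release the device and exit
                System.out.println("Closing device");
                robotArm.moveToZero();
                robotArm.moveBack();
                robotArm.showAngles();
                robotArm.close();
                System.exit(0);
            }
        } else {
            handSeen = true;
        }

        for (Finger finger : frame.fingers()) {
            if (finger.type() == Finger.Type.TYPE_INDEX) {
                // Center of the distal (fingertip) bone, in millimeters
                Bone distal = finger.bone(Bone.Type.TYPE_DISTAL);
                int x1 = (int) distal.center().getX();
                int y1 = (int) distal.center().getY();
                int z1 = (int) distal.center().getZ();

                // Leap: y up, z toward the user; remap to the arm's axes
                robotArm.moveTo(x1, -z1, y1);
            }
        }
    }
}

// Minimal launcher: register the listener and keep running until Enter is pressed
public class LeapRobotControl {
    public static void main(String[] args) {
        SampleListener listener = new SampleListener();
        Controller controller = new Controller();
        controller.addListener(listener);

        System.out.println("Press Enter to quit...");
        try {
            System.in.read();
        } catch (IOException e) {
            e.printStackTrace();
        }
        controller.removeListener(listener);
    }
}
```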
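The remap `(x, -z, y)` passed to `moveTo` follows from the Leap Motion coordinate system: positions are reported in millimeters in a right-handed frame centered on the device, with y pointing up and z pointing toward the user. The sketch below pulls that remap into a small helper and, as an optional addition that was not part of the original project, adds a deadband so that a slow, open-loop arm only receives a new target when the fingertip moves more than a few millimeters. The class name `FingertipMapper`, the assumption that the arm's frame has z up and y pointing away from the user, and the 5 mm threshold are all hypothetical.

```java
import java.util.Arrays;

/** Hypothetical helper: Leap frame -> arm frame, with a deadband filter. */
public class FingertipMapper {
    private final double deadbandMm;  // minimum motion before a new target is issued
    private double[] lastSent;        // last target sent to the arm (arm frame), or null

    public FingertipMapper(double deadbandMm) {
        this.deadbandMm = deadbandMm;
    }

    /**
     * Leap Motion: x right, y up, z toward the user (millimeters).
     * Assumed arm frame: x right, y away from the user, z up.
     * Hence (x, y, z) in the Leap frame maps to (x, -z, y) in the arm frame.
     */
    public static double[] toArmFrame(double x, double y, double z) {
        return new double[] {x, -z, y};
    }

    /** Returns the new arm-frame target, or null if the finger barely moved. */
    public double[] update(double x, double y, double z) {
        double[] target = toArmFrame(x, y, z);
        if (lastSent != null) {
            double dx = target[0] - lastSent[0];
            double dy = target[1] - lastSent[1];
            double dz = target[2] - lastSent[2];
            if (Math.sqrt(dx * dx + dy * dy + dz * dz) < deadbandMm) {
                return null;  // ignore jitter below the threshold
            }
        }
        lastSent = target;
        return target;
    }

    public static void main(String[] args) {
        FingertipMapper mapper = new FingertipMapper(5.0);
        double[][] samples = {{10, 200, -30}, {11, 201, -30}, {30, 210, -50}};
        for (double[] p : samples) {
            double[] t = mapper.update(p[0], p[1], p[2]);
            System.out.println(t == null ? "skip" : Arrays.toString(t));
        }
    }
}
```

Inside `onFrame`, one could call `update(...)` with the distal-bone center and call `moveTo` only when the result is non-null. This is one possible alternative to throttling with a fixed `Thread.sleep(500)`, not what the original project did.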

With this setup, I was able to track my index finger with the Leap Motion sensor through its Java SDK, translate the fingertip coordinates into joint angles, and send them to the OWI robot through its USB interface. The idea was tested successfully: the OWI arm reacted to the motion of my index finger and continuously adjusted its joint angles in real time to mimic my hand. This achieved real-time control and guidance without touching any controller.

This was an exciting and fun project during my first semester of my master's. Watching the robot mimic my hand was a satisfying reward for all the background work that went into it. There were also many takeaways, as I learned about the multiple concepts and technologies involved.

This work was later presented at the American Society for Engineering Education (ASEE) conference held at Northeastern University in 2015. Here is the link to the research paper.